Revisited: Parallel Multipart Uploads to S3 in PHP

It's been exactly two years since I shared how we were handling large uploads to S3. Since then, the code worked without significant changes until a few months ago, when we encountered a memory leak while uploading a large file to S3.

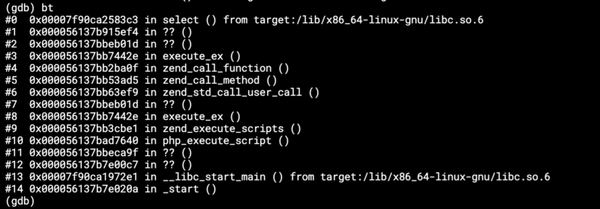

I tracked the memory leak down to a bug in the code: failed multipart uploads were retried indefinitely, flooding the memory with retry promises. This was rare, but it did happen several times; if I recall correctly, the file was larger than 100 GB. It was time to rethink the uploader and take some further optimization steps:

- Limit the maximum number of retries. This definitely stops the memory leak, but the right limit is hard to pick: too low and too many uploads fail, too high and we may run out of memory again.

- Do not retry the whole multipart upload. This looked difficult at first, but digging deeper into the AWS SDK for PHP revealed that it is possible to get the list of rejected promises and the state of each rejected upload. That state is then used to resume the upload instead of re-uploading the whole file, which significantly reduces the number of retries needed to finish an upload.

- Set the number of parallel uploads. There is no magic to it: with a single file to upload, the concurrency is set to 20; with multiple files, it is set to 5.

- Compress before uploading. All files are now compressed before they are uploaded. In most cases, compressing a file and uploading the smaller result is faster than uploading the original, and you may save time on both ends: whatever receives the files from S3 will also be faster, since it has less data to download.
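
The list above could be sketched roughly as follows. This is not the code from the post: `chooseConcurrency()`, `gzipFile()`, `uploadAll()`, the key-to-path shape of `$files` and the default of five retry rounds are hypothetical choices for illustration. The sketch assumes AWS SDK for PHP v3 and a guzzlehttp/promises version that provides `Utils::settle()`, and it relies on the SDK's `MultipartUploader` (its `state` and `concurrency` options) and on `MultipartUploadException::getState()` to resume only the rejected uploads.

```php
<?php

use Aws\Exception\MultipartUploadException;
use Aws\S3\MultipartUploader;
use Aws\S3\S3ClientInterface;
use GuzzleHttp\Promise\PromiseInterface;
use GuzzleHttp\Promise\Utils;

// Rule of thumb from the post, mapped (as an assumption) onto the SDK's
// `concurrency` option: 20 for a single file, 5 per file otherwise.
function chooseConcurrency(int $fileCount): int
{
    return $fileCount > 1 ? 5 : 20;
}

// Hypothetical helper: gzip-compresses $path into "$path.gz" in 1 MB chunks,
// so the file never has to fit in memory. Returns the compressed path.
function gzipFile(string $path, int $level = 6): string
{
    $target = $path . '.gz';
    $in = fopen($path, 'rb');
    $out = gzopen($target, 'wb' . $level);
    while (!feof($in)) {
        gzwrite($out, (string) fread($in, 1024 * 1024));
    }
    fclose($in);
    gzclose($out);
    return $target;
}

// Hypothetical helper: uploads several files in parallel. After each round,
// only the rejected uploads are resumed from their saved state. Once
// $maxRetries resume rounds are used up, the failure is rethrown instead
// of being retried forever. $files maps S3 keys to local file paths.
function uploadAll(S3ClientInterface $client, string $bucket, array $files, int $maxRetries = 5): void
{
    $concurrency = chooseConcurrency(count($files));
    $uploaders = [];
    foreach ($files as $key => $path) {
        $uploaders[$key] = new MultipartUploader($client, $path, [
            'bucket' => $bucket,
            'key' => $key,
            'concurrency' => $concurrency,
        ]);
    }

    for ($round = 0; $uploaders !== []; $round++) {
        // Start every pending upload, then wait until each one settles.
        $promises = array_map(fn (MultipartUploader $u) => $u->promise(), $uploaders);
        $results = Utils::settle($promises)->wait();

        $retry = [];
        foreach ($results as $key => $result) {
            if ($result['state'] !== PromiseInterface::REJECTED) {
                continue; // this file is done
            }
            $reason = $result['reason'];
            // Unknown errors and exhausted retries are not retried.
            if (!$reason instanceof MultipartUploadException || $round >= $maxRetries) {
                throw $reason;
            }
            // Resume from the saved state; completed parts are skipped.
            $retry[$key] = new MultipartUploader($client, $files[$key], [
                'state' => $reason->getState(),
                'concurrency' => $concurrency,
            ]);
        }
        $uploaders = $retry;
    }
}
```

A caller would compress each file with `gzipFile()` first and pass the `.gz` paths to `uploadAll()`; building the S3 client is omitted. Counting rounds is just one way to bound retries, and because each round resumes only the rejected uploads, the later rounds have far less left to send than a full re-upload would.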

I have also introduced a major simplification: all files except zero-byte files are uploaded using multipart. At first glance, the AWS documentation suggests that a multipart upload needs at least 5 MB of data, and it recommends multipart uploads for objects of 100 MB or more. But there is a small note in the docs: each part must be at least 5 MB in size, except the last part. Well, if you only have one part, it is the last part, so it can be smaller. I am ignoring the 100 MB recommendation, but it works, and for my use case, with an unpredictable number of files of varying sizes, sticking to one upload method keeps the code cleaner. Empty files, though, are uploaded using putObject and are not parallelized.
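
A minimal sketch of this single upload path, again assuming AWS SDK for PHP v3. `partCount()` and `uploadFile()` are hypothetical helpers, not the post's code, and `partCount()` assumes a fixed 5 MB part size purely to show why a small file ends up as one part that is also the last part.

```php
<?php

use Aws\S3\MultipartUploader;
use Aws\S3\S3ClientInterface;

// S3's minimum part size; only the last part of an upload may be smaller.
const MIN_PART_SIZE = 5 * 1024 * 1024;

// Hypothetical helper: number of parts for a non-empty file at a fixed
// part size. Anything up to 5 MB becomes a single (last) part.
function partCount(int $size, int $partSize = MIN_PART_SIZE): int
{
    return intdiv($size + $partSize - 1, $partSize);
}

// Hypothetical helper: every non-empty file goes through multipart,
// however small; zero-byte files fall back to a plain putObject call.
function uploadFile(S3ClientInterface $client, string $bucket, string $key, string $path)
{
    if (filesize($path) === 0) {
        return $client->putObject(['Bucket' => $bucket, 'Key' => $key, 'Body' => '']);
    }

    $uploader = new MultipartUploader($client, $path, ['bucket' => $bucket, 'key' => $key]);
    return $uploader->upload();
}
```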

A simplified version of the code showing mainly how to obtain the state and resume an upload is here.

Happy resuming!