Parallel Multipart Uploads to S3 in PHP

Being a Keboola Connection developer has some benefits. Because the platform is asynchronous and batch-oriented, I rarely have to worry about milliseconds or dive deep into profiling. But moving big chunks of data has to be fast, as fast as you can make it: parallelized, fail-proof and stable.

A recent client of ours had to upload ~30 GB of gzipped data into Keboola Connection every hour. It is by far the largest volume we have had to ingest at this frequency.

The 30 GB CSV payload was split into ~300 files, so we first tried concatenating them into a single large file and uploading it with a multipart upload, which the AWS SDK parallelizes internally. Unfortunately the job did not finish within 60 minutes; much of that time was spent just concatenating the files.

Keeping the original 300 files would also be better for loading the data into Snowflake or Redshift, since both load multiple files in parallel, which is much faster than loading one large file. But uploading the 300 files one after another with multipart upload also took over 60 minutes. This is where the fun began: we had to run multiple multipart uploads in parallel.

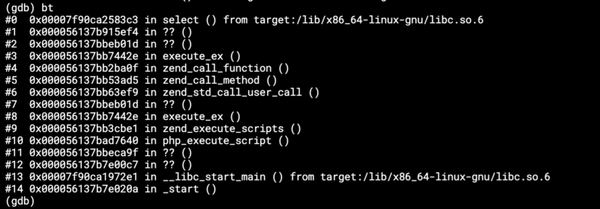

After spending a few days in this uncharted territory, I came up with a solution.

The solution relies on several tricks:

- Chunking: the array of files is split into chunks so that not all files are processed at the same time, which would lead to "too many open files" errors.

- Array of promises: I used `\Aws\S3\MultipartUploader` to create a promise for each file and collected all promises from the processed chunk into an array; `\GuzzleHttp\Promise\unwrap` then waits for them to complete.

- Upload retries: any failed upload throws an `\Aws\Exception\MultipartUploadException`, but `unwrap` only rethrows the exception of the first rejected promise it reaches, so you basically get only the first failure. I need to iterate over the promises array and check the state of each promise. If a promise is rejected, a new promise for that file is created, appended to the array and passed to `unwrap` again.
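The three tricks above can be combined roughly as follows. This is a minimal sketch, not the original code: it assumes `aws/aws-sdk-php` (which pulls in `guzzlehttp/promises`) is installed and autoloaded via Composer, and the helper names `startUpload`, `rejectedKeys` and `uploadFilesInParallel`, the basename-as-key convention and the default chunk size of 50 are all hypothetical. One deliberate swap: instead of `unwrap`, it waits with Guzzle's `Utils::settle()`, which resolves once every promise has either fulfilled or rejected and never throws, so all failed uploads in a chunk can be collected in one pass.

```php
<?php

declare(strict_types=1);

use Aws\S3\MultipartUploader;
use Aws\S3\S3Client;
use GuzzleHttp\Promise\PromiseInterface;
use GuzzleHttp\Promise\Utils;

/**
 * Starts an asynchronous multipart upload of one local file and returns its promise.
 * Hypothetical convention: the object key is the file's basename.
 */
function startUpload(S3Client $s3, string $bucket, string $file): PromiseInterface
{
    $uploader = new MultipartUploader($s3, $file, [
        'bucket' => $bucket,
        'key'    => basename($file),
    ]);

    return $uploader->promise();
}

/**
 * Returns the keys of all promises in the "rejected" state
 * (the value of GuzzleHttp\Promise\PromiseInterface::REJECTED).
 */
function rejectedKeys(array $promises): array
{
    $failed = [];
    foreach ($promises as $key => $promise) {
        if ($promise->getState() === 'rejected') {
            $failed[] = $key;
        }
    }

    return $failed;
}

/**
 * Uploads $files as parallel multipart uploads, $chunkSize files at a time,
 * giving each file at most $maxAttempts attempts.
 */
function uploadFilesInParallel(
    S3Client $s3,
    string $bucket,
    array $files,
    int $chunkSize = 50,
    int $maxAttempts = 3
): void {
    // Chunking caps how many uploads (and thus open files) are in flight at once.
    foreach (array_chunk($files, $chunkSize) as $chunk) {
        $promises = [];
        foreach ($chunk as $i => $file) {
            $promises[$i] = startUpload($s3, $bucket, $file);
        }

        for ($attempt = 1; ; $attempt++) {
            // Wait until every promise is fulfilled or rejected; settle() never throws.
            Utils::settle($promises)->wait();

            $failed = rejectedKeys($promises);
            if ($failed === []) {
                break; // the whole chunk is uploaded
            }
            if ($attempt >= $maxAttempts) {
                $names = array_map(fn (int $i): string => $chunk[$i], $failed);
                throw new RuntimeException(
                    "Upload failed after {$maxAttempts} attempts: " . implode(', ', $names)
                );
            }
            // Replace only the rejected promises with fresh uploads and wait again.
            foreach ($failed as $i) {
                $promises[$i] = startUpload($s3, $bucket, $chunk[$i]);
            }
        }
    }
}
```

After `require 'vendor/autoload.php'` and creating an `S3Client`, a call such as `uploadFilesInParallel($s3, 'my-bucket', glob('/data/*.csv.gz'))` would upload every matching file. Retrying with a fresh uploader re-sends the whole file; passing `MultipartUploadException::getState()` back through the uploader's `state` option would resume the upload instead, at the cost of a little more bookkeeping.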

Finally, some benchmarks. Using this approach we were able to upload 46 GB (300 CSVs) to S3 in well under 10 minutes. Turning on server-side encryption seems to slow the upload down to ~10 minutes. Uploading the same data as a single file with putObject took ~30 minutes.
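For a rough sense of scale, here is the implied average throughput, assuming 1 GB = 1000 MB and taking 10 minutes (600 s) as an upper bound for the parallel run and 30 minutes (1800 s) for the single-file upload:

$$
\frac{46\,000\ \text{MB}}{600\ \text{s}} \approx 77\ \text{MB/s}
\qquad\text{vs.}\qquad
\frac{46\,000\ \text{MB}}{1800\ \text{s}} \approx 26\ \text{MB/s}
$$

so the parallel uploads moved data at least about three times faster than the single-file upload.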

If you want to see the whole shebang in production, our Storage API PHP Client has the same bits and pieces and even includes S3 server-side encryption.
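For reference, one way to request server-side encryption with `MultipartUploader` is its `before_initiate` callback, which receives the CreateMultipartUpload command before it is sent. This is a sketch, not the Storage API client's actual code; the helper name `encryptedUploaderConfig` and the choice of `AES256` (S3-managed keys) are assumptions.

```php
<?php

declare(strict_types=1);

/**
 * Builds a MultipartUploader config that asks S3 to encrypt the object at rest.
 * Hypothetical helper: pass the result as the third argument of
 * new \Aws\S3\MultipartUploader($s3, $source, $config).
 */
function encryptedUploaderConfig(string $bucket, string $key): array
{
    return [
        'bucket' => $bucket,
        'key'    => $key,
        // Runs before CreateMultipartUpload; SSE-S3 is requested when the
        // multipart upload is initiated. Aws\Command implements ArrayAccess.
        'before_initiate' => function (\ArrayAccess $command): void {
            $command['ServerSideEncryption'] = 'AES256';
        },
    ];
}
```

In the parallel sketch above, the same array could be used in place of the plain `bucket`/`key` options when constructing each uploader.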

If you know a faster way to move files to S3, let me know!