Using Amazon Redshift to Analyze S3 Access Logs

We have recently encountered a higher number of the following S3 errors when uploading small files (~10MB) to S3:

We have recently encountered a higher number of the following S3 errors when uploading small files (~10MB) to S3:

<?xml version=”1.0" encoding=”UTF-8"?>

<Error>

<Code>RequestTimeout</Code>

<Message>Your socket connection to the server was not read from or written to within the timeout period. Idle connections will be closed.</Message>

<RequestId>627924E92F249664</RequestId>

<HostId>***</HostId>

</Error>Well, that does not say much. We're using a decently recent version (3.14.2) of AWS SDK PHP and are reassured by the repo maintainers that the problem is not caused by the SDK.

How to debug this issue?

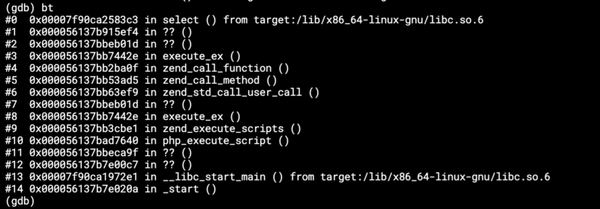

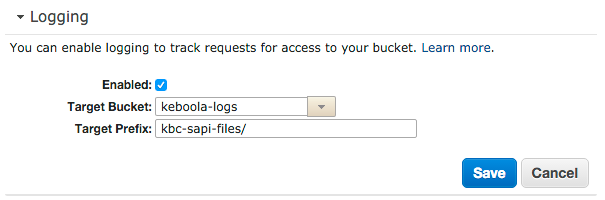

We have enabled S3 access logging, so we should have all logs at hand.

The first look at the logs doesn't tell us much. Each file in S3 contains multiple records, and the delivery of the logs might be delayed. That means I definitely need to load all of these files somewhere.

Dog-feeding didn't help me this time. We can easily extract data from S3 and store it into our storage for processing. However a space delimited CSV is something we haven't thought about, so it's not supported by our applications (although I created an item in our Productboard, so it might be addressed in the future). Now it's time to look somewhere else.

Sample log entry

148befa0ffaf90a6a7b7eef94aa52797b65e78f456d49b180954500b717cf69d kbc-sapi-files [31/Jan/2016:23:49:31 +0000] 52.21.230.94 arn:aws:iam::147946154733:user/keboola_clients_prod F68BDD82433D6652 REST.PUT.PART exp-30/***/***/170133313.csv0003_part_00 "PUT /kbc-sapi-files/exp-30/***/***/170133313.csv0003_part_00?partNumber=5&uploadId=*** HTTP/1.1" 200S3stat asks for read and write credentials to all our buckets. AWStats is even a server suite. Thanks, but no thanks. My thoughts returned back to the AWS stack. I have read some posts about AWS Firehose… that might take a bit to set up for the first time.

Finally, Martin came across this blogpost from AWS about analyzing ELB logs in Redshift. That looked fairly easy and it was very similar to my issue! I took the log file structure definition, connected DBeaver to one of our Redshift clusters and started creating a CREATE TABLE script. After a few iterations this has worked out. (Read this blogpost on how to launch and connect to a Redshift cluster)

CREATE TABLE s3_logs (

bucket_owner varchar(100) encode lzo,

bucket varchar(100) encode lzo,

"time" varchar(100) encode lzo,

"zone" varchar(100) encode lzo,

remote_ip varchar(100) encode lzo,

requester varchar(1000) encode lzo,

request_id varchar(100) encode lzo,

operation varchar(100) encode lzo,

"key" varchar(1000) encode lzo,

request_uri varchar(1000) encode lzo,

http_status varchar(100) encode lzo,

error_code varchar(100) encode lzo,

bytes_sent varchar(100) encode lzo,

object_size varchar(100) encode lzo,

total_time varchar(100) encode lzo,

turn_around_time varchar(100) encode lzo,

referrer varchar(1000) encode lzo,

user_agent varchar(1000) encode lzo,

version_id varchar(100) encode lzo

);You might wonder why i put time and zone into separate columns — I wasn't able to force Redshift to load space delimited data while using the log file date format which contains space ([06/Feb/2014:00:00:38 +0000]). And honestly, I didn't really try too hard. I also couldn't use total_time or turn_around_time as a number because the data contains dashes (data not available). So I ended up having everything as varchar. Simple, any enhancements are welcome!

Then I set up a new IAM user that has a read-only access to the log bucket and folder and used the COPY command to load the data into the table.

COPY

s3_logs

FROM

's3://keboola-logs/kbc-sapi-files/2016–02–08'

COMPUPDATE OFF

CREDENTIALS

'aws_access_key_id=***;aws_secret_access_key=***'

DELIMITER ' '

ACCEPTINVCHARS

REMOVEQUOTES

FILLRECORD

MAXERROR as 100000;Voila! One day worth of access logs loaded in ~4.5 minutes (~100k records). Here are some example queries I used to debug my issue.

SELECT * FROM s3_logs WHERE request_id = '627924E92F249664';

SELECT * FROM s3_logs WHERE "key" = 'exp-180/***/***.csv.gz' ORDER BY "time" ASC;

SELECT * FROM s3_logs WHERE "time" LIKE '[15/Feb/2016:07%' AND remote_ip = '54.85.18.69' ORDER BY "time" ASC;

SELECT * FROM s3_logs WHERE error_code = 'RequestTimeout' ORDER BY "time" DESC;If you have any problems loading the data using the COPY command, simply look into the stl_load_errors table.

SELECT * FROM stl_load_errors ORDER BY starttime DESC LIMIT 10;Now I have all the logs available in Redshift where they can be easily accessed and analyzed. And that's for it for now! If I manage to solve the issue, I'll let you know in another post :-).