Testing the untestable

An important part of Keboola Connection are Extractors — especially Database Extractors. These components load data from different (SQL)…

An important part of Keboola Connection are Extractors — especially Database Extractors. These components load data from different (SQL) databases and import them into Keboola Connection. They are in principle very simple — they execute an SQL query and export the result into a CSV file. As with all simple things, the devil is in the detail. One of those details is retrying on transient errors. Usually there is a substantial amount of data returned by the SQL query, so the export easily takes few hours and we don’t want short network interruptions to break it.

This is where the devil comes into the scene. There is a vast amount of reasons why the export can break. These can occur in various places and each database system and driver reports and handles them differently. Therefore we have implemented our own retry mechanism, only to discover that it doesn't work reliably. It’s actually one of quite common problems on our support line — that some error slips through the retry and causes the data load to fail. This is usually ‘just an annoyance’, because it’s enough to restart the import job. But even such a small annoyance can have a big business impact!. One day we discovered that the MySQL extractor ended successfully, but because of a network interruption it imported only part of the table. Then it became seriously, seriously bad.

The Hunt

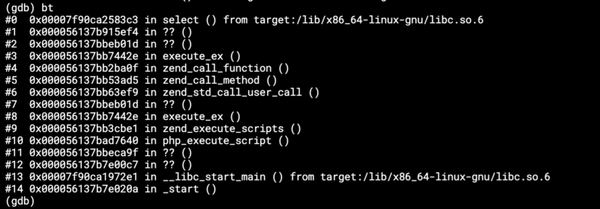

The first step was to try to replicate the bug in a controlled environment. From our logs, it was evident that the extractor was interrupted in the middle of downloading data. Which is basically (uses PHP PDO):

(Of course, for various reasons the above code is spread across different classes and methods.)

So I created an Amazon RDS instance, loaded a table with 10M rows into it, set up the extractor locally and added extra logging to it. Then I ran it locally and while it was downloading the data, I unplugged the network cable from my computer. After a few attempts I managed to get a successful run and only partially exported table. Bug confirmed, the way of the Cross ahead.

Side-note: What I described here is common for all our database extractors. However, I used MySQL to test everything, because MySQL is a huge PITA. It is without exaggeration the worst behaving database server ever built (ok, that was a bit of exaggeration), especially when it comes to error management. Practically all errors are masked under “MySQL server has gone away” (which I wish were true after what I’ve been through). That error is so common that it now has a dedicated page in the manual which lists the possible reasons. These range from a lost TCP packet to an incorrect query or overloaded server.

So when I confirmed that the bug exists, I continued with the hunt. I ran the entire thing in PhpStorm with a debugger, paused the PHP script in a certain place, disconnected the network and traced what happens. Eventually I found the reason. It was the following code (greatly simplified):

The actual retry mechanism is a lot more complicated, because we don’t want to retry on every \Throwable, we want to use exponential backoff for retries, etc. But the above code suffices to demonstrate the bug. In order of execution: connect to the database, prepare the query, execute it, fetch rows, if an error occurs, reconnect, repeat. The problem is that $stmt = $pdo->prepare() is outside of the retry loop. That itself is not a big problem, because the subtle setting ATTR_EMULATE_PREPARES means that there is no reasonable way to raise an exception in the prepare method. However, it also means that when the connection is re-established, the old query handle is used — that’s the $stmt variable. Now an (in)experienced developer might expect that calling $stmt->fetch() when $stmt is invalid would make PHP go haywire.

No, it simply returns false as if it were at the end of the statement result set (it is actually at the end, in the sense that it cannot get any more rows). No warnings or exceptions produced. Well, usually. Sometimes, you get “MySQL server has gone away”. But most of the time, it simply returns false when the connection is re-established. Nevertheless, the fix was reasonably easy:

Now comes the hard part: how to test this.

The Hunt for Test Part 1

Verifying that the fix was correct manually was quite easy. I ran the extraction locally, interrupted the network connection and checked the logs and result. Automating it seemed almost impossible at first.

First I was looking for less brutal ways of interrupting the network connection than unplugging the Ethernet cable. Disabling the network interface did the trick too, but still was quite brutal. Microsoft TCPView is even less brutal, because it allows me to kill just the single PHP-MySQL connection. Unfortunately, it cannot be used from the command line. But there is a similar tool CurrPorts, which allows to kill a single TCP connection from a command line. This allowed me to create my first attempt at a PHPUnit test:

The above test causes interruption when the extractor connects to the database, not when it fetches the data. So it doesn’t test for the fix. To actually interrupt during the fetch, it has to be something like this:

Where the killConnection.php is something like:

I need to introduce an X second sleep for the extractor to connect to the server, execute the query and start fetching. Only then the connection can be killed. The > /dev/null & part in calling the script is crucial. It makes the script execute asynchronously on the background so that the test can continue executing. The good news is that this test works. The bad news is that it is (obviously?) unstable. If the kill executes too early, the extraction is interrupted before the fetch (and the retry I want to test is not tested). If it executes too late, the fetch is completed before the kill and nothing is tested. However, with a table large enough and a little timeout fiddling, I was able to make this test to do the correct thing and pass in 9 out of 10 runs. Not perfect, but acceptable. This filled me with enormous optimism.

The Hunt for Test Part 2

The next step was to transfer my local development setup to the test (and production) environment. So I needed a replacement for the windows cports utility. The tcpkill from the dsniff package seemed to be the closest, so I replaced it.

And failed. The recommended way of terminating a TCP connection on *nix is to terminate the process associated with it — which is exactly what I don’t want to do. tcpkill works around this by listening to the TCP chatter and when it finds a matching packet, it sets a TCP RST. This means two things:

- It is not 100% reliable. Sometimes a few packets manage to slip through which is surprisingly enough to fetch data.

- It leads to a number of new and totally obscure MySQL errors. So instead of seeing “MySQL server has gone away” and “Empty row packet body”, I saw: “Error while sending QUERY packet” or “SQLSTATE[HY000] [2002] Connection refused” or “SQLSTATE[HY000]: General error: 1094”. This does not matter for the retry itself (that works regardless of the error message). It makes it really hard to set the test to verify that the correct retry was tested.

This caused the entire test to be even more unreliable. When I tried to create similar tests for testing the errors on PDO::query() and PDOStatement::execute(), the entire test-suite was so unreliable that it was mostly failing (or, even worse, erroneously passing). Back to the drawing board.

The Hunt for Test Part 3

When interrupting the connection physically seemed impossible, I was wondering if we can mimic the situation.

Thanks to having my testing server on AWS, I was able to supply the kill script with credentials with permission to restart the server. So I modified the kill script to something like this:

Side-note: because you can’t reboot an instance which is already rebooting, the script ended up much more complex than the above one.

Fired up the test, and realized that it works! Except that it doesn’t. When you restart the server, it’s (most of the time) available as a network endpoint, so the attempt to query it actually returns a different error: “SQLSTATE[HY000] [2002] Connection refused”. And to make matters worse, it is not bubbled from the PDO as the usual warning, but as \PDOException (see the next part). And to make matters even worse, it still sometimes ends with “MySQL server has gone away” (sometimes as a warning and sometimes as an exception). But only occasionally. Not enough to build (even remotely) reliable tests.

The Hunt for Test Part 4

Then it occurred to me that the test suite runs in docker, and I might actually return to a brutal network reset. That is, as long as it occurs inside the container in which the tests run. Unfortunately, the classical ifdown eth0 does not work inside a docker container. That is, not with a bridged network. I tried to replace the remote RDS instance with a local MySQL container, but then the entire thing does not work at all.

Although the containers are networked together, the connection behaves more like a local socket and it’s almost impossible to trigger the connection errors. I don’t have an exact explanation for this, but I have a hunch that the interruptions are so quick that the internal driver retry mechanisms can deal with them most of the time (however, sometimes it behaved like the table was simply empty, giving the “Query returned empty result” error). So I realized I would definitely need an external MySQL instance to test this, therefore I need a bridge connection, therefore I can’t take the entire eth0 down.

How about disconnecting the container from the bridge? Yes, that is possible using the docker network command. I can use docker network disconnect networkId hostId to kill the connection and docker network connect networkId hostId to bring it up. This would still suffer from the same timing issue as the first test, but that seemed to be acceptable. However, I need to run that command from within the PHPUnit tests. That means: create another docker image for testing (normally we use the same one for tests and production) with a docker client installed, mount the docker socket from host, make sure that the network and container have static names (or find them dynamically) and alter the killer script to kill the connection after a certain time and leave it down for a certain time. Because it proved to be all so fragile with the tcpkill journey, I actually started by writing tests for the killer itself.

This is pretty straightforward, except ErrorHandler::register(null, true). This is a very important step, because part of the entire problem is that PDO Error handling is ultimately broken. An (un)experienced developer might think, that setting PDO::ERRMODE_EXCEPTION will do what the documentation says:

PDO will throw a PDOException and set its properties to reflect the error code and error information.

That is very naive. In fact, PDO will throw an exception on a number of occasions, e.g. it will throw a PDOException “SQLSTATE[HY000] [2002] Connection refused”. But when it comes to the dreaded “MySQL server has gone away” error (I still wish this were true), it suddenly switches to PDO::ERRMODE_WARNING and only throws a PHP warning. And to make it worse, it does that only sometimes. That of course is a known bug, which received zero attention over the last 5 years.

Fortunately, I use the Symfony framework which uses an error handler to catch those warnings and turn them into an \ErrorException. Unfortunately, PHPUnit messes with this handler in tests (something I’ve already run into). It is possible to turn this feature off, but only for the entire PHPUnit run, not for a single test (or a part of a test). Already have the feeling like I’m hitting something constantly?

Luckilly, the Symfony ErrorHandler has a feature (almost undocumented) to replace the previous handler, so by calling \Symfony\Component\Debug\ErrorHandler::register(null, true) I can replace the PHPUnit error handler and keep the warnings converted to \ErrorException. Before I discovered this however, the test was a nonsense, because different things were happening inside the test and in the production code.

Ok, so I have a successful and stable test for interruption during \PDO::query(). Remember that this is not testing the actual code, it’s only testing whether I can reliably terminate the connection and detect in what phase of the code it was terminated. Time for a test for interruption during \PDOStatement()::execute():

A piece of cake. And now interruption during \PDOStatement::fetch() (that’s the original reason why I’ve started all this):

Straightforward. Except it doesn’t work. To be precise, it works in about 5 out of 10 runs. In 3 out of 10 it simply passes as if nothing happened. And in 2 out of 10 it freezes indefinitely (more than an hour to be precise).

What the hell?

The entire thing runs in a container. So I ran it, then created another shell in that container to precisely observe and match the calls to \PDOStatement:fetch() with the network interruption. I discovered the following behavior.

There is a fetch cache/buffer (whose existence is, to my knowledge, undocumented but logical), so when the network is interrupted, the calls to fetch() pass as if nothing had happened. Until the end of the buffer is reached. Then a new set of rows (in case of my tables, this is about 2–5 thousand rows) has to be physically fetched from the server. Then it becomes interesting.

- In case of some network errors (like those incurred by

tcpkill), it fails immediately. - In case of some other network errors (like “Network is unreachable”), it keeps retrying.

- And third, in case the connection is killed on the server (via the KILL command), it fails immediately.

The first scenario is out of question, because tcpkill proved to be unreliable. The non-exact nature of the network error caused the non-exact results of the tests — i.e. it is a different situation when the physical request is issued when the network is interrupted or when the network is being interrupted.

The second scenario is what caused the freezing. There is a MySQL setting for the net_read_timeout, net_retry_count and net_write_timeout, which together should make a 10 minute retry window. Unfortunately, the test was waiting much longer than that, and changing these configuration values had no effect. There is also a \PDO::ATTR_TIMEOUT, which also has no effect and deserves to be called a Red herring.

The only configuration value which had any effect was wait_timeout. The wait_timeout is the time for which a MySQL connection can be idle. When exceeded, the connection is killed. Disconnecting the network for e.g. 2 minutes makes the connection idle for 2 minutes, so if wait_timeout is set to 110 seconds, the connection gets killed.

So I tried using wait_timeout and fine tuning the sleeps, and the successful passes went away. The test successfully interrupted the connection during fetch 8–9 times out of 10 runs.

Except that in 1 out of 10 runs, it froze indefinitely.

Believe me, there is a happy end to this madness.

I was on the verge of leaving it like this and use PHPUnit @large annotation to forcefully terminate that test.

Then I remembered the third scenario — KILL the connection on the server — in which the fetch() gets terminated immediately (once it runs out of its buffer) and reliably.

The Hunt for Test Part 5

Terminating the connection via KILL command was always tempting and it always seemed impossible. One of the things which led me off track initially was that the entire thing does not work when testing against the local docker network (and that was exactly how I did my preliminary test). Now that I knew a reliable way of triggering the right error, I dug into it again.

Writing a test for this is dead easy:

But the kill mechanism is not right. I cannot randomly insert arbitrary SQL queries in the tested code. Killing the connection before the extraction runs won’t do either, because I need to kill it before specific functions are called and also the extractor is able to reconnect. If only there was a way to kill the connection just before \PDOStatement::fetch() is called.

Actually there is! \PDO::ATTR_STATEMENT_CLASS is the solution as it allows to use a different class to represent the statement result. Since both \PDO and \PDOStatement are PHP classes (albeit a little weird ones), they can be inherited and somewhat modified. Now I was able to create my own PDO:

and

and these are created using:

So that makes the PDO call the killConection() method of the test just right before it executes a particular function. The kill connection can be implemented like this:

The entire test class is available in our repository. And the final version of the dreaded \PDOStatement::fetch() kill test is:

And that, my dear readers, really works!

There are still a couple of hiccups on the way. For example, I have to use a different connection for killing (taintedPDO) and a different connection for executing (serviceConnection). One (obvious) reason is that otherwise I’m not able to tell if the kill was successful. And the second reason is that we use unbuffered queries, which do not allow running a query when a result set is open — ending with the error:

SQLSTATE[HY000]: General error: 2014 Cannot execute queries while other unbuffered queries are active. Consider using PDOStatement::fetchAll(). Alternatively, if your code is only ever going to run against mysql, you may enable query buffering by setting the PDO::MYSQL_ATTR_USE_BUFFERED_QUERY attribute.

Also the connections have to be created at the right times, otherwise I get the obvious error “SQLSTATE[HY000] [1040] Too many connections”. And then there are couple of minor issues e.g., injecting the whole taintedPDOthing into the Extractor class, which is supposed to create (and re-create) the connection itself. This lead me to use the PHPUnit magic method disableAutoReturnValueGeneration(). But I’ll keep that for next time.

That guy pretty much summarizes the entire article.