Eliminating Performance Issues with AAD Pod Identity Using Managed Mode

In this post, I explain why you should switch from the standard mode of AAD Pod Identity to the managed mode, and how to do it without disrupting the service.

Setup

Some of the services in our Azure application must connect to Azure managed services, and we follow the best practice of using passwordless authentication. When we implemented this, we did so using AAD Pod Identity (https://github.com/Azure/aad-pod-identity).

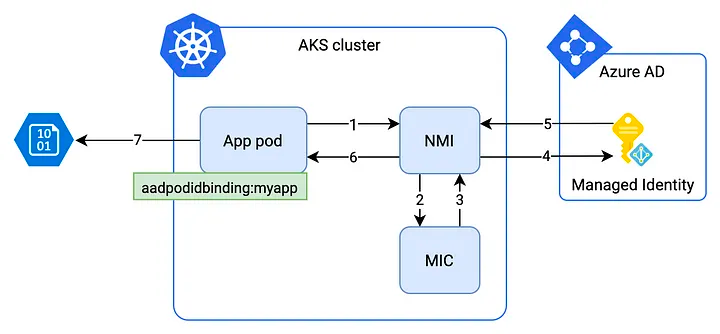

AAD Pod Identity has two modes, standard and managed. The standard mode is the easiest to use and is the default deployment type. It consists of two components: Managed Identity Controller (MIC) and Node Managed Identity (NMI). The MIC deployment checks the workload running on each node and assigns Azure Managed Identities to nodes as required. The NMI DaemonSet exposes an identity metadata endpoint on each node and serves identity credentials to the relevant pods, provided that the matching Azure Managed Identity is assigned to the node.
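To make the endpoint part concrete, here is a minimal sketch of the token request a pod sends to the instance metadata address, which is the traffic NMI answers. The function name request_token is hypothetical, and the api-version value is just one documented version, not necessarily the one your SDK uses.

```shell
# Sketch only: request_token is a hypothetical helper name.
# Ask the identity metadata endpoint for a token for the given resource.
request_token() {
  local resource="$1"      # e.g. https://storage.azure.com/
  local client_id="${2:-}" # optional client ID of a user-assigned identity

  set -- --silent --show-error --fail --get \
    --header 'Metadata: true' \
    --data 'api-version=2018-02-01' \
    --data-urlencode "resource=${resource}"
  if [ -n "$client_id" ]; then
    set -- "$@" --data-urlencode "client_id=${client_id}"
  fi

  # The well-known instance metadata address; inside the cluster this
  # request is served by NMI.
  curl "$@" 'http://169.254.169.254/metadata/identity/oauth2/token'
}
```

Running request_token https://storage.azure.com/ from inside a pod should return a JSON document with an access token if the identity is available to that pod.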

For more details about AAD Pod Identity, see

- https://itnext.io/the-right-way-of-accessing-azure-services-from-inside-your-azure-kubernetes-cluster-14a335767680

- https://azure.github.io/aad-pod-identity/docs/

Problem

If your application runs a significant amount of ephemeral workload with frequent pod creation and deletion, you may run into performance issues with the standard mode of AAD Pod Identity. In this section, we discuss the pitfalls of the standard mode and explain why managed mode is a better option for applications with high pod turnover.

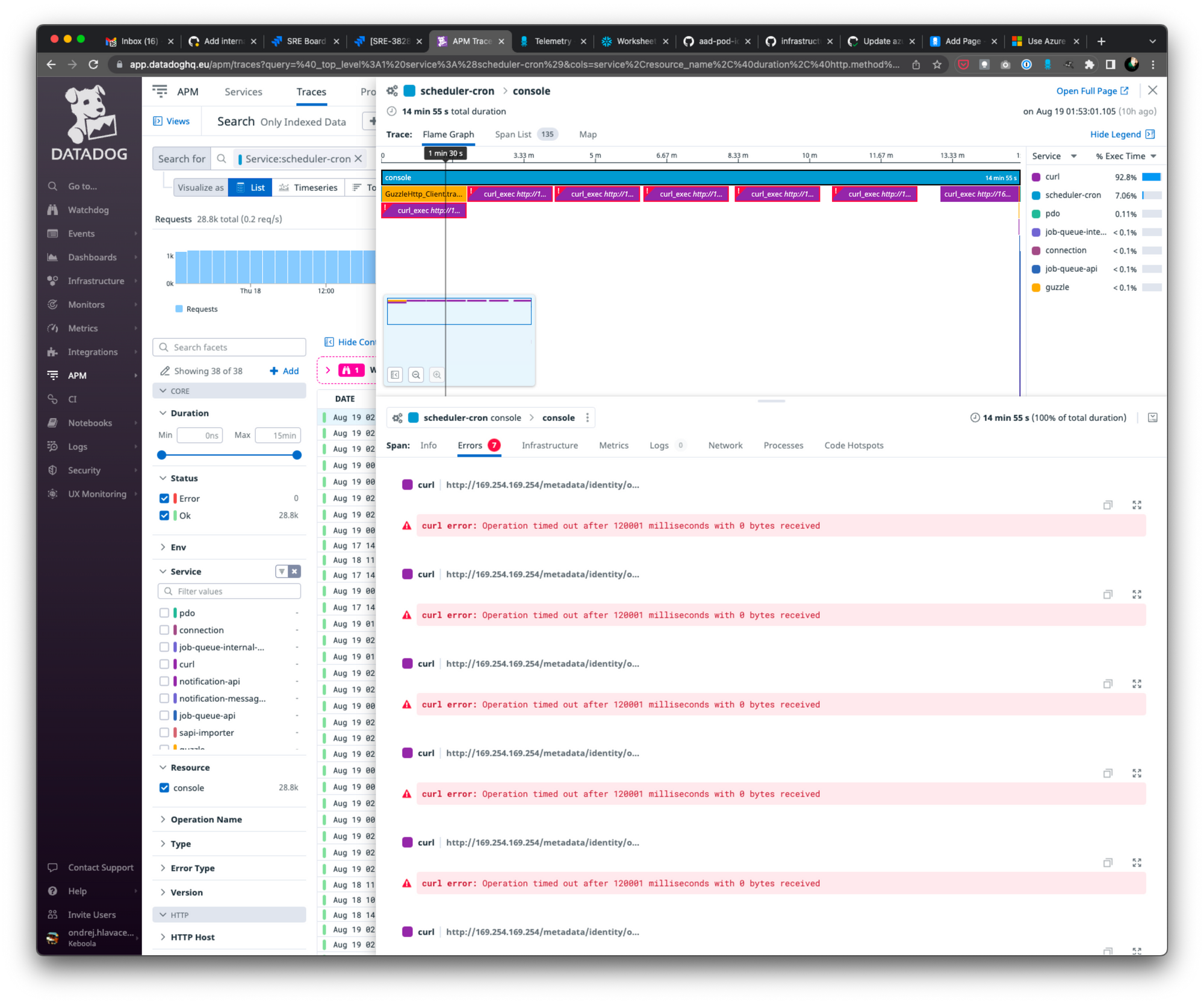

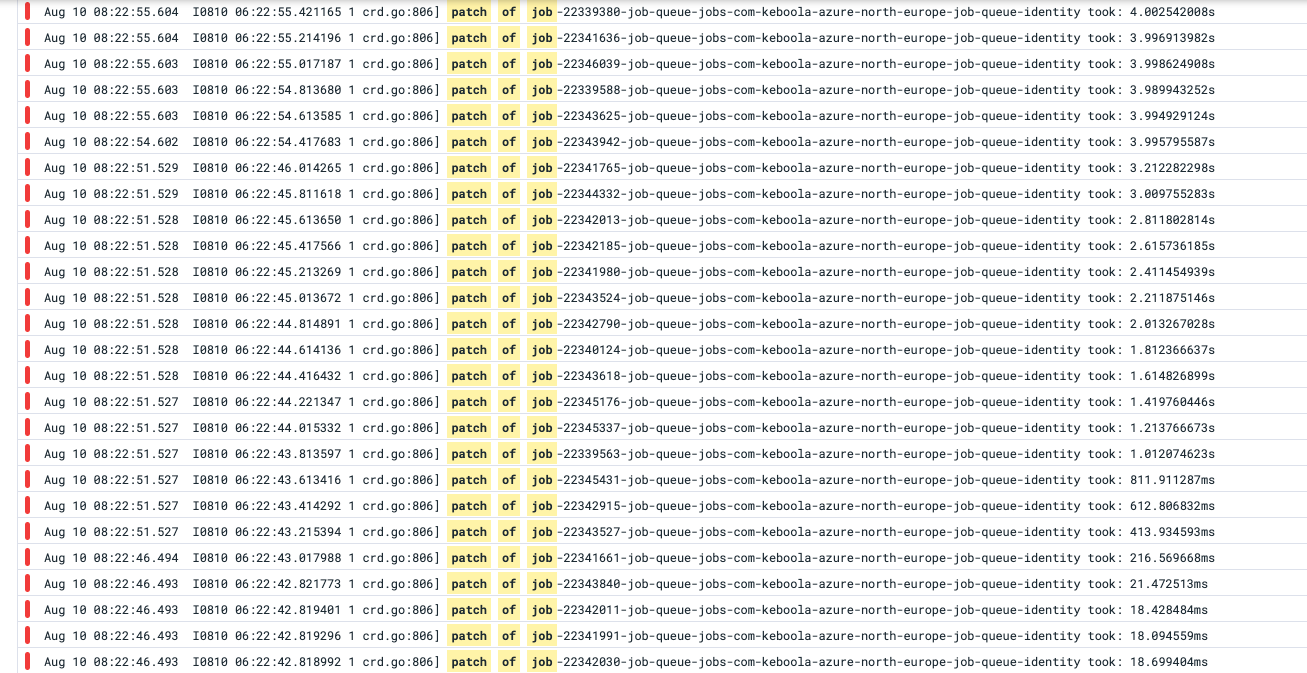

Our application runs many batch workloads, so we create and delete pods frequently, often hundreds or thousands per hour. This activity keeps the MIC very busy, to the point where we encountered a significant amount of Kubernetes API client-side throttling. The throttling escalated until even 120-second timeouts and a retry mechanism with a 15-minute timeout did not help.

The delays and throttling occurred because the MIC had to regularly check for deleted or added pods before it could assign identities to nodes. This was a blocking operation for the MIC. When there were a lot of pods to check, it generated a large number of requests on the Kubernetes API. The API then defended itself by throttling, which caused delays and timeouts. As a result, the blocking MIC assignment loop took a long time, during which all identity requests to the NMI endpoint had to wait.
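One way to gauge how bad the throttling is: count the client-side throttling messages in the MIC logs. The sketch below matches the two message formats that the Kubernetes Go client commonly logs. The function name is hypothetical, and the deployment name mic in the usage comment is an assumption about your installation.

```shell
# Sketch only: count_throttled_requests is a hypothetical helper name.
# Reads log lines on stdin and prints how many report client-side throttling.
count_throttled_requests() {
  # grep -c exits non-zero when the count is 0, so keep the status clean.
  grep -c -E 'Throttling request took|due to client-side throttling' || true
}

# Usage (assumes the MIC deployment is called "mic" in the current namespace):
#   kubectl logs deployment/mic --since=1h | count_throttled_requests
```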

Solution(s)

To resolve the issues caused by the long MIC blocking loop and client-side throttling, we tried several solutions. Initially, we decreased the frequency of the garbage collector process, which was deleting hundreds of pods per hour and triggering the blocking loop. We set it to run once every 10 minutes, which improved the situation to some extent. However, this was only a temporary fix: updating the identities of new pods still took approximately 4 seconds each, which was too slow for the volume we were processing.

As the traffic in our application kept increasing, we needed a more sustainable solution. We explored the documentation and raised the issue on the service's GitHub repository (https://github.com/Azure/aad-pod-identity/issues/1267). Eventually, we decided to switch to the managed mode of AAD Pod Identity, which eliminates the need for the MIC component. This meant that managed identities were no longer assigned to nodes on the fly, and we had to assign them manually. Since we were able to specify which nodes required identities and which ones did not, this assignment was relatively static and manageable.

However, we had to figure out how to implement this switch without disrupting the service, as the official documentation suggested a solution that would inherently cause an outage (https://azure.github.io/aad-pod-identity/docs/configure/standard_to_managed_mode/).

Implementation

The implementation, including the deployments to the testing and production clusters, took us around three man-days (MDs). Not great, not terrible.

1) Identify all identities and their node (pool) assignments

Due to the nature of MIC, we did not have to worry about what was assigned where since it all happened automatically. However, when getting rid of MIC, we had to conduct some code archaeology and create a table of identities and node pools to figure out what belonged where.

For each assignment, we attached the following information:

- Managed identity name

- Node pool name(s)

- Kubernetes namespace(s)

- Services using the managed identity (and their repositories)

- Test case (of each service) to verify that the assignment was successful

This list had 8 identities, 12 services, 5 node pools, 4 namespaces, and 12 test cases, all in 4 repositories.
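Such a table can also live in a plain text file that scripts can read. The sketch below assumes a hypothetical pipe-delimited format (identity|node pools|namespaces|services, with commas inside a field) and expands it into one identity and node pool pair per line. Neither the format nor the function name comes from our actual repositories.

```shell
# Sketch only: the inventory format and expand_assignments are hypothetical.
# Reads inventory lines on stdin and prints "identity pool" pairs.
expand_assignments() {
  while IFS='|' read -r identity pools _namespaces _services; do
    # Skip blank lines and comment lines.
    case "$identity" in ''|'#'*) continue ;; esac
    # A single identity may belong to several node pools.
    for pool in $(printf '%s' "$pools" | tr ',' ' '); do
      printf '%s %s\n' "$identity" "$pool"
    done
  done
}
```

For example, the line storage-reader|batch,api|jobs|importer would expand to the pairs storage-reader batch and storage-reader api.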

2) Begin manual assignment of identities

Azure does not allow the declarative setting of identities, so the only option was to use the CLI. We created a bash function to handle the assignment of identities to node pools:

    assign_identity_to_node_pool() {
      local RESOURCE_GROUP=$1
      local IDENTITY_NAME=$2
      local NODE_POOL_NAME=$3
      local IDENTITY_ID VMSS_RG VMSS_NAME

      # Resolve the full resource ID of the managed identity.
      IDENTITY_ID=$(az identity show \
        --resource-group "$RESOURCE_GROUP" \
        --name "$IDENTITY_NAME" \
        --query id \
        --output tsv)

      # Read the AKS node resource group from the outputs of our deployment (kbc-aks).
      VMSS_RG=$(az deployment group show \
        --name kbc-aks \
        --resource-group "$RESOURCE_GROUP" \
        --query "properties.outputs.aksNodeResourceGroup.value" \
        --output tsv)

      # Find the scale set that backs the node pool.
      VMSS_NAME=$(az vmss list \
        --resource-group "$VMSS_RG" \
        --query "[?tags.\"aks-managed-poolName\" == '$NODE_POOL_NAME'].name" \
        --output tsv)

      # Assign the identity to the scale set ...
      az vmss identity assign \
        --resource-group "$VMSS_RG" \
        --name "$VMSS_NAME" \
        --identities "$IDENTITY_ID"

      # ... and roll the change out to the existing instances.
      az vmss update-instances \
        --resource-group "$VMSS_RG" \
        --name "$VMSS_NAME" \
        --instance-ids "*" \
        --no-wait
    }

Since the az vmss identity assign command does not assign identities to existing nodes, we had to follow it with the az vmss update-instances command. It was a bit tricky, but we managed to make it work.
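To double-check the result, you can ask the scale set which user-assigned identities it carries. The following is a minimal sketch with a hypothetical function name. It inspects the scale set model only, not each individual instance, and compares resource IDs case-insensitively because Azure does not always return them in the same casing.

```shell
# Sketch only: vmss_has_identity is a hypothetical helper name.
# Succeeds if the scale set lists the given identity resource ID.
vmss_has_identity() {
  local vmss_rg="$1" vmss_name="$2" identity_id="$3"

  # userAssignedIdentities is a map keyed by resource ID; fall back to an
  # empty map when the scale set has no user-assigned identity at all.
  az vmss identity show \
    --resource-group "$vmss_rg" \
    --name "$vmss_name" \
    --query 'keys(userAssignedIdentities || `{}`)' \
    --output tsv | grep -qixF "$identity_id"
}
```

Calling it with the same VMSS resource group, VMSS name, and identity ID that the assignment function resolved returns a zero exit status when the identity is present.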

We could then call the function with the command assign_identity_to_node_pool myAksResourceGroup myManagedIdentity nodePoolName.
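With more than a handful of assignments, a small loop saves typing. The sketch below is an illustration rather than our exact script: it reads one identity and node pool pair per line from standard input and calls assign_identity_to_node_pool for each. The wrapper name assign_all is hypothetical.

```shell
# Sketch only: assign_all is a hypothetical wrapper around the article's
# assign_identity_to_node_pool function, which must already be defined.
assign_all() {
  local resource_group="$1"
  local failed=0

  while read -r identity pool; do
    [ -n "$identity" ] || continue  # skip blank lines
    # Detach stdin so the called commands cannot swallow the remaining pairs.
    if ! assign_identity_to_node_pool "$resource_group" "$identity" "$pool" </dev/null; then
      echo "assignment failed: $identity -> $pool" >&2
      failed=1
    fi
  done

  return "$failed"
}
```

A call such as assign_all myAksResourceGroup < pairs.txt processes every pair and returns a non-zero status if any assignment failed.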

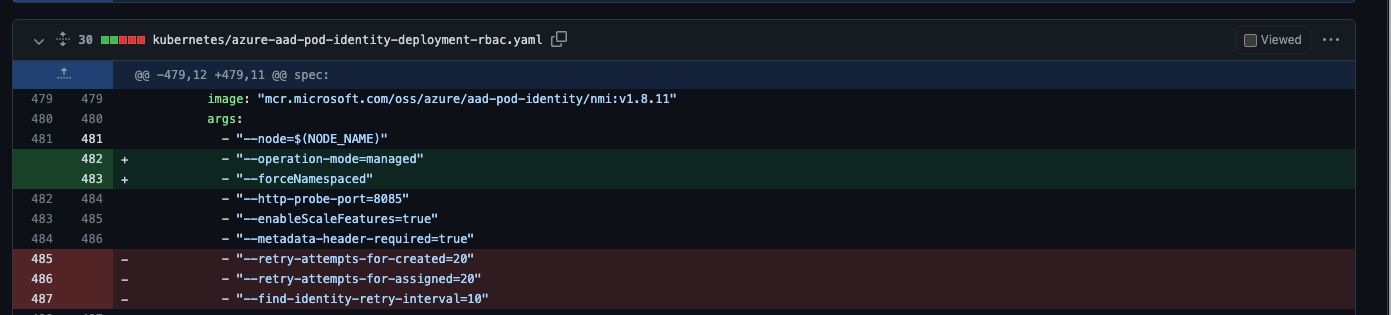

3) Switch NMI to managed mode

Switching NMI to managed mode was as simple as adding a new argument to the DaemonSet definition and removing some invalid arguments.
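The argument in question is --operation-mode=managed. A quick way to confirm that the running DaemonSet picked it up is sketched below. The default names (DaemonSet nmi in the default namespace) are assumptions about the installation, as is NMI being the first container in the pod template.

```shell
# Sketch only: nmi_is_managed is a hypothetical helper name.
# Succeeds if the NMI DaemonSet runs with --operation-mode=managed.
nmi_is_managed() {
  local namespace="${1:-default}" daemonset="${2:-nmi}"

  # Print the args of the first container and look for the managed-mode flag.
  kubectl get daemonset "$daemonset" \
    --namespace "$namespace" \
    --output jsonpath='{.spec.template.spec.containers[0].args}' \
    | grep -q -e '--operation-mode=managed'
}
```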

While rerunning the test cases after the deployment, we discovered that the MIC was still running and that it, rather than our code, was still assigning identities to nodes.

4) Scale down MIC replicas

Before deleting the resource entirely, it's good practice to scale down the replicas to zero first, which makes it easier to recover if there is an emergency.
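Scaling down is a one-liner with kubectl. Here it is wrapped in a small sketch so that the same call can restore the replicas in an emergency. The function name and the defaults (deployment mic in the default namespace) are assumptions about the installation.

```shell
# Sketch only: scale_mic is a hypothetical helper name.
# Scale the MIC deployment to the given number of replicas.
scale_mic() {
  local replicas="$1" namespace="${2:-default}" deployment="${3:-mic}"

  kubectl scale deployment "$deployment" \
    --namespace "$namespace" \
    --replicas="$replicas"
}
```

scale_mic 0 stops the MIC. Calling it again with the previous replica count brings it back.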

Afterward, we ran the test cases again. Next, we drained a node from each node pool to obtain a new node that had not historically been assigned an identity from the MIC. We ensured that the node was assigned an identity using az vmss identity assign, and then we ran the test cases on the new node(s) once again.
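The drain step could look like the sketch below. It assumes that nodes carry the agentpool label that AKS puts on them, and that your kubectl is recent enough to know the --delete-emptydir-data flag. The function name is hypothetical. Whether a fresh node then appears depends on how the pool scales, which is outside this sketch.

```shell
# Sketch only: drain_one_node_in_pool is a hypothetical helper name.
# Drain the first node kubectl returns for the given AKS node pool.
drain_one_node_in_pool() {
  local pool="$1" node

  # Assumes AKS labels its nodes with agentpool=<pool name>.
  node=$(kubectl get nodes \
    --selector "agentpool=${pool}" \
    --output jsonpath='{.items[0].metadata.name}') || return 1

  if [ -z "$node" ]; then
    echo "no node found in pool ${pool}" >&2
    return 1
  fi

  # DaemonSet pods (such as NMI) cannot be evicted, so tell drain to skip them.
  kubectl drain "$node" --ignore-daemonsets --delete-emptydir-data
}
```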

Check.

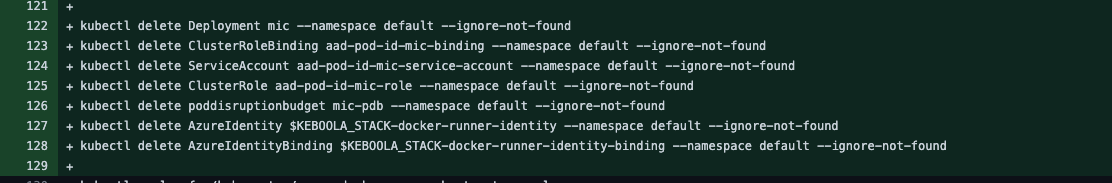

5) Clean up MIC

- Delete the MIC deployment and the PDB.

- Delete all resources related to the MIC (service accounts, roles, …).

- Delete all identities created in K8s.

With all these changes implemented, we began running smoothly with AAD Pod Identity in managed mode, and all our previous issues were resolved. However, we are still limited by the manual assignment of identities, so any new identity must be manually assigned to the respective node pool first, which can be a hassle. We're eagerly awaiting the time when support for managed identities becomes available in Azure Workload Identity, which will hopefully make this process much more automated.